“These were the last years before the birth of digital life” – this is how the next generations will discuss our time…

In this article, we will continue the conversation about individual artificial intelligence based on a neural matrix and a brain-computer interface. This time we will analyze the main mathematical component of the concept – a matrix based on a new artificial neuron.

ChatGPT and other LLMs – why are these models not ideal?

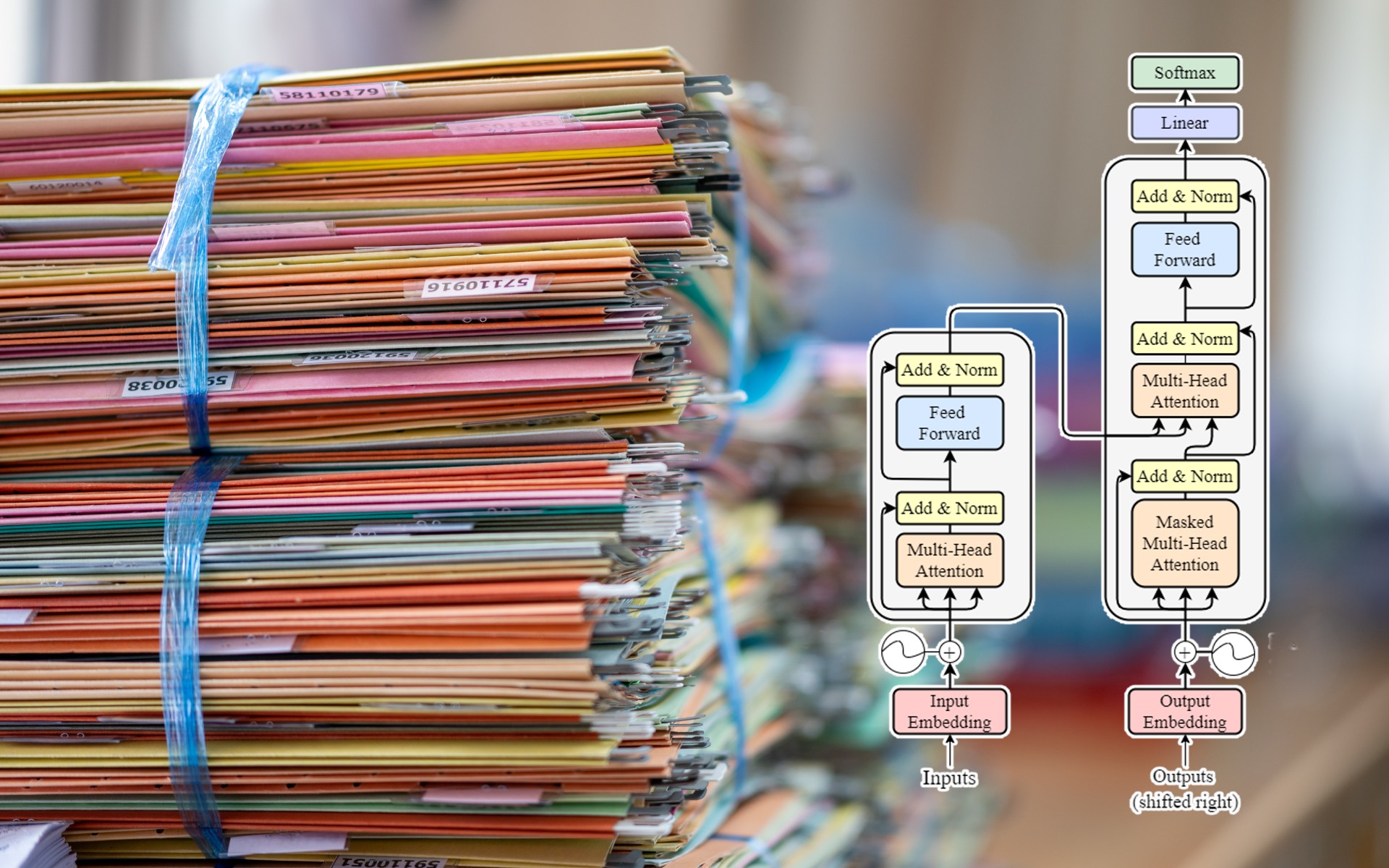

Any existing language model is a kind of black box. Developers have no way to improve results other than feeding in even more input data and increasing computing power.

There are a few more ways to optimize a model, such as fine-tuning on your own files (I experimented with this quite a lot in AI SYNT), but the result remains the same: if you want something new in the answer, you load new data and experiment with the output. When we receive the output, we cannot tell whether it is a random result or a creative decision.

Why is that?

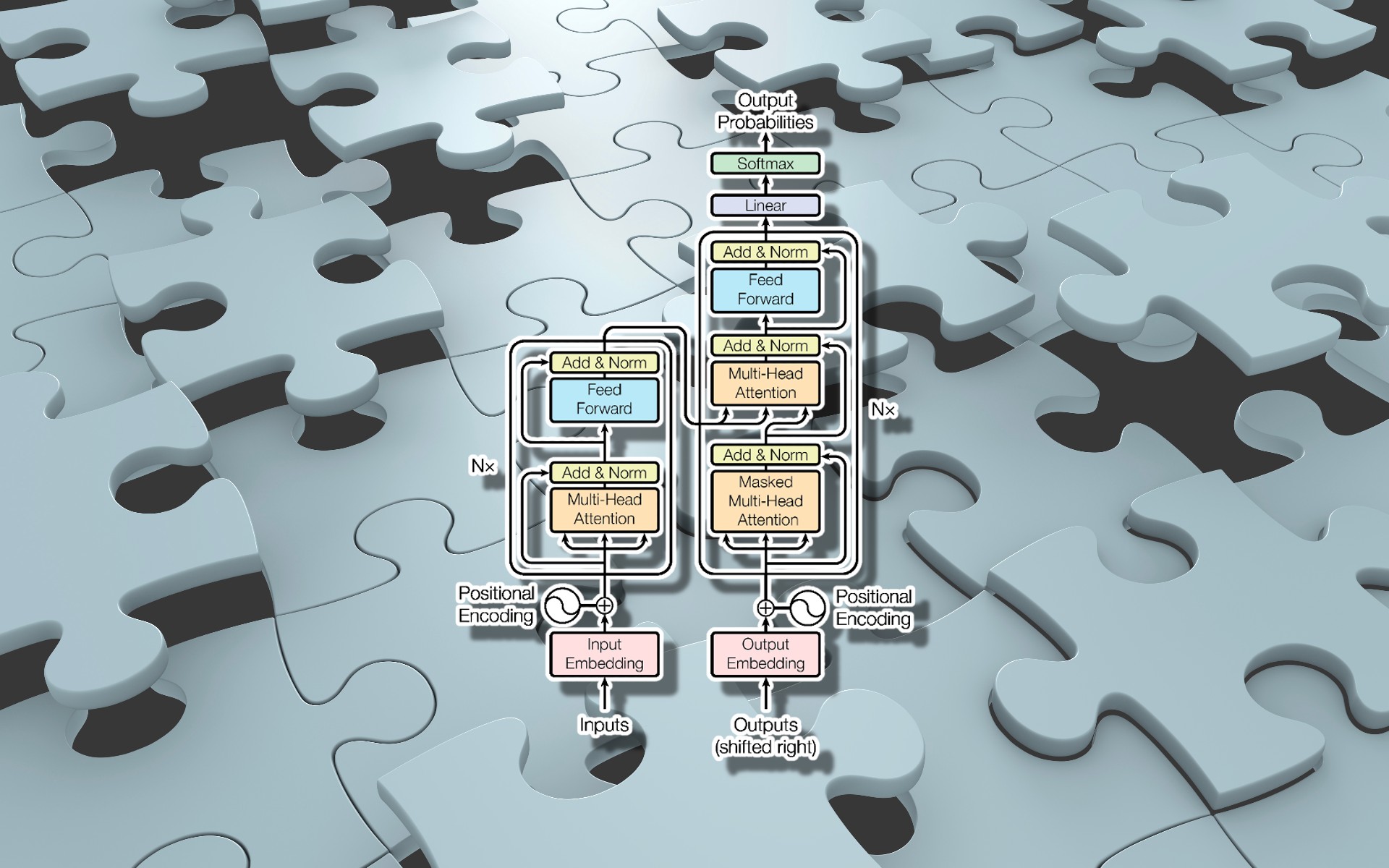

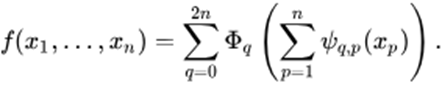

The main reason is that, despite all the innovations of recent years, all known LLMs still rest on the multilayer perceptron (MLP) model, an idea from the middle of the last century. The basis of MLP is learning through the weights of a mathematical neuron. In the classic formulation, incoming data passes from one neuron to the next (in the next layer) only if the neurons are connected and the weighted sum exceeds a threshold. For this reason, the neuron weights must be adjusted until the network produces a result that matches our expectations. In other words, between the input and the output we are trying to define a function, and we shape it by manipulating the weights. During training, the neural network simply changes the weights of the neurons so that the initial data gives the desired (expected) result. From a mathematical point of view, a modern neural network does not define the function explicitly; it only approximates the result.
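To make the idea of "learning by adjusting weights" concrete, here is a minimal sketch of a single threshold neuron trained with the classic perceptron rule. It is an illustration only: the task (logical AND), the learning rate, and all function names are assumptions chosen for the example, and real LLMs use far larger networks and smoother activation functions.

```python
def step(z):
    # Threshold activation: the neuron "fires" only above zero.
    return 1 if z > 0 else 0

def predict(weights, bias, x):
    # Weighted sum of the inputs, then the threshold.
    return step(sum(w * xi for w, xi in zip(weights, x)) + bias)

def train(samples, epochs=20, lr=1.0):
    # Perceptron rule: nudge the weights whenever the output is wrong.
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

# Hypothetical toy task: learn logical AND from four examples.
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train(AND)

for x, target in AND:
    print(x, "->", predict(weights, bias, x), "expected", target)
```

Note that the only things that change during training are the numbers in `weights` and `bias`; the shape of the activation function `step` stays fixed.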

There is another way…

Back at the end of the last century, when I worked at the Department of Normal Physiology on a mathematical model of synaptic transmission between neurons, one of the candidate approaches was the Kolmogorov-Arnold-Moser theory (KAM theory, created in the USSR), which describes dynamical systems and belongs to chaos theory. The idea was that the living neural network of the brain is, from a mathematical point of view, a classical three-dimensional dynamical system that can be described as a complex geometric function of many points on the hypersurface of a three-dimensional matrix. In this case, each point has its own coordinate line: an individual function that captures a feature of the neuron.

Another AI or Big Idea on Standby

Despite the tempting prospects of the Kolmogorov-Arnold-Moser theory, the dominant opinion at the end of the last century was that a brain model (an artificial neural network) based on KAM theory could not be built, because training and tuning neurons that each have an individual variable function required too many resources.

Now everything has changed. The performance of computing systems has increased so much that a small neural matrix based on KAM can even run on your personal computer.

What is the KAM matrix?

The main difference is that instead of transforming one complex function that depends on many variables, KAM works with many simple functions. In the existing MLP network, the functions are fixed, and the weights are trainable. In the KAM structure, the weight indicators depend on the parameter (X) of the previous neuron. Consequently, in the KAM matrix, the functions themselves are trained, and not just the numerical values of the weights.

To put it simply, the fixed activation functions on neurons and the linear weights on edges in the MLP model are replaced in KAM by trainable nonlinear activation functions on the edges.
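The contrast can be sketched in a few lines of code. The example below is an assumption-laden illustration, not the author's implementation: the description above closely matches what the literature calls Kolmogorov-Arnold networks, and here a simple piecewise-linear curve stands in for the spline functions often used in such networks. The names `EdgeFunction`, `kam_layer`, and `mlp_neuron` are hypothetical.

```python
import math

class EdgeFunction:
    """Trainable 1-D function on an edge: piecewise-linear over fixed knots."""

    def __init__(self, lo=-1.0, hi=1.0, n_knots=5):
        self.lo, self.hi = lo, hi
        self.values = [0.0] * n_knots  # the trainable parameters

    def __call__(self, x):
        x = min(max(x, self.lo), self.hi)  # clamp to the knot range
        pos = (x - self.lo) / (self.hi - self.lo) * (len(self.values) - 1)
        i = min(int(pos), len(self.values) - 2)
        t = pos - i
        return (1 - t) * self.values[i] + t * self.values[i + 1]

def kam_layer(edges, xs):
    # edges[j][i] is the function on the edge from input i to output j;
    # each output is just the sum of its incoming edge functions.
    return [sum(phi(x) for phi, x in zip(row, xs)) for row in edges]

def mlp_neuron(weights, xs):
    # MLP counterpart: trainable numbers on edges, fixed activation (tanh).
    return math.tanh(sum(w * x for w, x in zip(weights, xs)))

# An edge function whose knot values follow x**2 at -1, -0.5, 0, 0.5, 1.
square = EdgeFunction()
square.values = [1.0, 0.25, 0.0, 0.25, 1.0]

out = kam_layer([[square, square]], [0.5, 1.0])
print(out)
print(mlp_neuron([0.5, -0.5], [0.5, 1.0]))
```

In the MLP neuron the nonlinearity is fixed and only the numbers in `weights` can change; in the edge-function layer the whole curve stored in `values` is what training would reshape.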

The Kolmogorov-Arnold-Moser theory allows you to deform the neural layer of a neural network, turning it into a matrix layer. Thus, training a KAM matrix means changing the simple functions that connect the weight to the (X) parameter of the previous neuron. This way, we can turn a complex function into an accurate and explainable matrix deformation.
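As a rough illustration of what "training the function itself" could look like, the sketch below fits a single edge function to a target curve by gradient descent. Everything here is an assumption made for the example: the piecewise-linear representation, the number of knots, the learning rate, the target `x * x`, and the names `locate`, `evaluate`, and `train_edge`.

```python
KNOTS = 9
LO, HI = -1.0, 1.0

def locate(x):
    # Find the segment that contains x and the relative position inside it.
    pos = (min(max(x, LO), HI) - LO) / (HI - LO) * (KNOTS - 1)
    i = min(int(pos), KNOTS - 2)
    return i, pos - i

def evaluate(values, x):
    # Piecewise-linear interpolation between neighbouring knot values.
    i, t = locate(x)
    return (1 - t) * values[i] + t * values[i + 1]

def train_edge(target, passes=500, lr=0.2):
    values = [0.0] * KNOTS  # the function starts flat
    xs = [LO + (HI - LO) * k / 40 for k in range(41)]
    for _ in range(passes):
        for x in xs:
            i, t = locate(x)
            err = evaluate(values, x) - target(x)
            # Gradient of the squared error with respect to the two
            # knot values that influence the output at x.
            values[i] -= lr * err * (1 - t)
            values[i + 1] -= lr * err * t
    return values

values = train_edge(lambda x: x * x)
print([round(v, 2) for v in values])
```

After training, the learned function can be read directly from `values`: each number is the height of the curve at one knot, which is one way to picture why such a model is easier to inspect than a table of weights.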

What is the result?

Despite the initial difficulty in setting up (training), the operation of the KAM matrix will be understandable and explainable, since we can track and reliably describe how the result is associated with changes in the function of each neuron.

In addition, changing the function of the neuron (instead of manipulating the numerical value of the weight) will give greater accuracy in the results with a KAM matrix that is significantly smaller than an MLP network.

Since the parameter (X) of the previous neuron means deformation, the KAM matrix will be able to retain the memory of the processed information, which means that the KAM matrix has less need to expand the database or retrain.

What are the differences between a KAM matrix and a living brain?

If we are talking about individual AI, we must understand that a deterministic function determines the inevitable evolution of a dynamic system.

At the operating frequency of the KAM matrix (for example, 60 hertz), we will have a cascade, that is, a discrete-time dynamical system whose trajectory is described by an autonomous system of difference equations (the discrete counterpart of differential equations) that yields 60 positions per second.

Therefore, the main difference is that the living brain is a flow, not a cascade, and its topological dynamics are not discrete. However, considering the difference in signal speed, the flow of a living brain will still be a million times slower than the KAM matrix cascade.
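The difference between a cascade and a flow can be shown with a toy system. This is a sketch under stated assumptions: the rule `f(x) = -x` is a hypothetical stand-in for real dynamics, chosen only because its continuous solution is known exactly, and the 60 Hz rate is the example frequency mentioned above.

```python
import math

RATE_HZ = 60          # example update frequency
DT = 1.0 / RATE_HZ    # time between two cascade positions

def f(x):
    # Hypothetical dynamics: the state decays toward zero.
    return -x

def cascade_step(x):
    # Discrete map: one jump from the current position to the next.
    return x + DT * f(x)

def run_cascade(x0, seconds):
    x = x0
    for _ in range(int(round(seconds * RATE_HZ))):
        x = cascade_step(x)
    return x

def flow(x0, t):
    # Exact continuous solution of dx/dt = -x, defined for every t.
    return x0 * math.exp(-t)

x_cascade = run_cascade(1.0, 1.0)  # 60 discrete positions
x_flow = flow(1.0, 1.0)            # continuous trajectory at t = 1 s
print(x_cascade, x_flow)
```

The cascade only exists at 60 moments per second, while the flow has a value at every instant; the two stay close here because the step is small relative to how fast the state changes.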

Time for big changes

The paradox is that, watching AI being born, we do not understand the importance of what is happening now. We think about investments and income, worry about the redistribution of jobs, and do not understand the most important thing: a new form of life is being born before our eyes and with our participation. We are not creating a tool or a technology; we are building something capable of living, working, and developing faster and perhaps more successfully than ourselves.