Humans have designed a range of tools to aid us in the creation of art, and they’ve evolved dramatically over time. The creative person’s tool kit has recently grown with the addition of a formidable new tool: text-to-image generators powered by artificial intelligence.

The possibilities of what this novel technology can be used to create are in many ways endless, but that wide range of potential comes at a cost. While some images — whether cartoon-like doodles or highly realistic scenes that resemble real photographs — may be creative or inspiring, others could in some cases be harmful or dangerous.

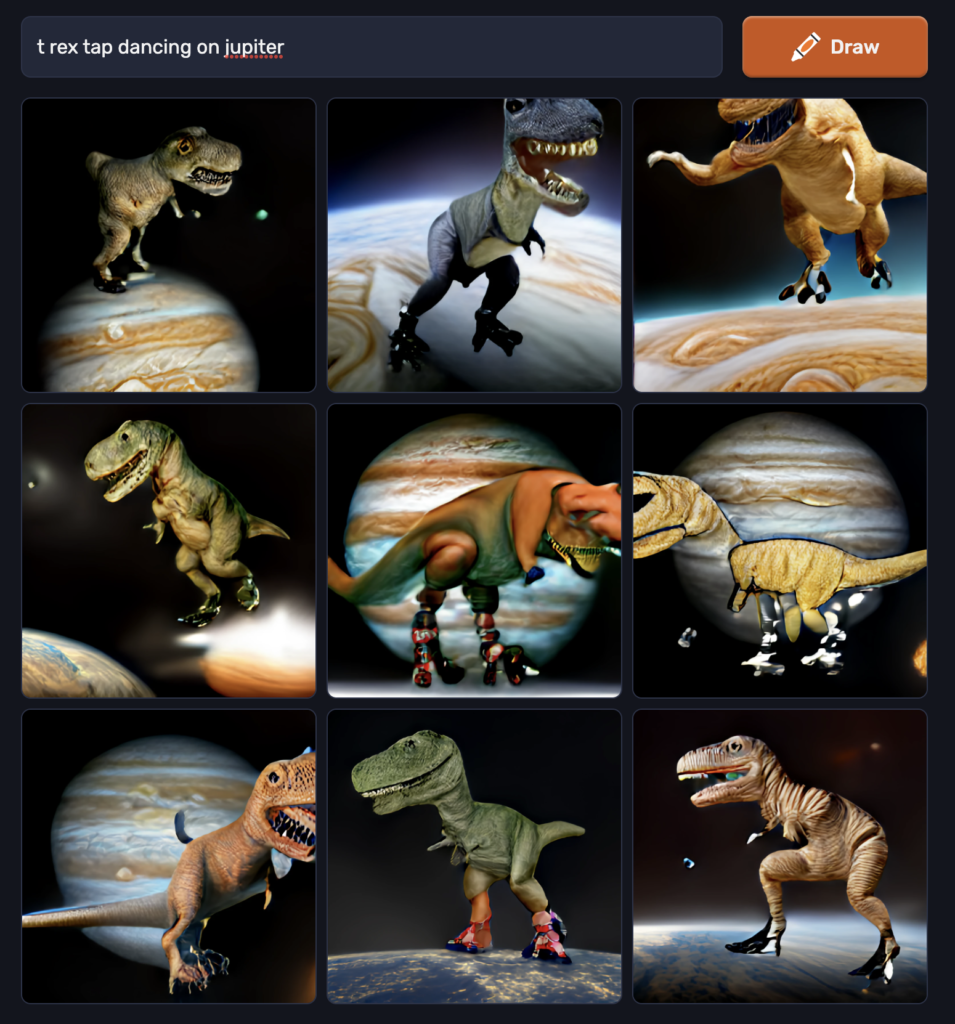

When a user enters a handful of key words, these models generate images that combine those concepts in novel ways. If you want to see a T. rex tap-dancing on Jupiter but don’t have a knack for Photoshop or charcoal, a number of different models will do the job for you, with varying degrees of quality, often in mere seconds.

Image generated using Craiyon, formerly known as DALL-E mini. Credit: Craiyon

Since this technology’s fairly recent public debut, users and onlookers have raised questions over its potential nefarious uses. The easier it is for a person to create false images designed to make people believe something that isn’t true, the greater the potential for real damage.

“You can see why this is in some ways a much more powerful technology from the point of view of creativity, but also manipulation,” said Hany Farid, a professor of computer science at the University of California, Berkeley, comparing the new generators with the models that preceded them.

Bad actors using technology to create false or misleading content is nothing new — the fact is “we’ve always been distorting reality,” said Farid, noting that Soviet dictator Joseph Stalin notoriously manipulated photographs to his benefit.

But there’s no doubt that the new models are a game changer. Soon enough, we’ll have similar tools able to create novel videos and audio. Amid the potential for wrongdoing — including copyright concerns — some experts note that there are practical ways image generators could be put to positive use, and others are pushing to develop a digital infrastructure that will help internet users verify what they see online.

Generative AI, meaning that which is capable of creating new content, is a rapidly advancing field. Here are the basics of how it works, and some of the ways it could be used in the not-so-distant future.

How do text-to-image AI models work?

An earlier iteration of AI-generated images relied on generative adversarial networks (GANs). Though they’ve been somewhat eclipsed by newer technology, Farid said they were “all the rage five years ago,” which he noted highlights how quickly this technology is evolving.

You can picture GANs as having a double-faced head, like the ancient Roman god Janus. On the left, there’s the generator, and on the right, the discriminator. The generator is tasked with creating an image that doesn’t actually exist — for example, a “photo” of a human face. Farid explained that it then hands that image over to the discriminator, which has access to millions of images of real human faces so it can act as a sort of fact-checker.

If the image the generator made is merely an approximation of a face — as in, the discriminator can tell it’s not the real thing — the two continue in a loop until the discriminator doesn’t note a difference between the AI-generated face and the real ones it’s seen before, Farid said. (You can check out the website thispersondoesnotexist.com for some examples of the end product.)
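The back-and-forth Farid describes can be sketched in a few lines of Python. This is a toy illustration under heavy assumptions, not how real face generators are built: each "image" is a single number, the generator is a two-parameter formula, and the discriminator is a simple logistic scorer. The names `train_toy_gan` and `real_mean`, along with every setting, are invented for this example.

```python
import math
import random

def sigmoid(t):
    # Numerically stable logistic function; maps any score to a value in (0, 1).
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def train_toy_gan(steps=2000, lr=0.01, real_mean=4.0, seed=0):
    # Hypothetical toy setup: "real" data are numbers drawn around real_mean.
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator: fake = a * z + b, with z random noise
    w, c = 0.0, 0.0   # discriminator: p(real) = sigmoid(w * x + c)
    for _ in range(steps):
        real = rng.gauss(real_mean, 1.0)
        z = rng.gauss(0.0, 1.0)
        fake = a * z + b

        # Discriminator step: raise its score on the real sample,
        # lower its score on the generator's fake.
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        w += lr * ((1.0 - d_real) * real - d_fake * fake)
        c += lr * ((1.0 - d_real) - d_fake)

        # Generator step: nudge the fake so the updated discriminator
        # rates it as more likely to be real.
        d_fake = sigmoid(w * fake + c)
        grad_fake = (1.0 - d_fake) * w   # slope of log D(fake) with respect to fake
        a += lr * grad_fake * z
        b += lr * grad_fake
    return a, b, w, c

a, b, w, c = train_toy_gan()
print(f"generator offset b = {b:.2f}, discriminator weight w = {w:.2f}")
```

In this sketch the generator never sees the real data directly; it only learns from the discriminator's verdicts. Toy setups like this one can oscillate rather than settle, which hints at why full-scale GANs were known for being difficult to train.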

These GANs can create a convincing fake human face, but with limitations. The faces are always from the neck up, and users can only change a few details, such as hair and skin tone. They are also restricted to a single category, Farid explained, so you can’t place a human in the image beside a cat. A GAN can only generate one or the other, depending on the source images it has access to.

Newer models take a completely different approach to image creation called diffusion. Before they generate a novel image based on a user’s command, they’re trained on hundreds of millions of different images, each paired with a caption that describes it in words.

Farid explained that training involves starting with each image, breaking it down to visual noise — random pixels that don’t represent anything specific, kind of like static on an old television — and inverting the process so that the model can go from noise back to the original image.

“What the system is learning is how to start with a text prompt, a noise pattern and go back to a full image because it’s done that now a billion times,” he added.
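The noising-and-reversing idea can be made concrete with a small sketch. The article does not say which formulation any particular model uses; the code below assumes a commonly used scheme in which an image is blended with Gaussian noise according to a fixed schedule. The function names, the four-pixel "image" and the schedule values are all illustrative assumptions. A real system trains a neural network to predict the noise from the noisy image and the caption; here the true noise is handed back directly, just to show that a good noise estimate is enough to recover the picture.

```python
import math
import random

def alpha_bar(t, num_steps=1000, beta_start=1e-4, beta_end=0.02):
    # Share of the original signal's variance that survives t noising steps,
    # under an assumed linear noise schedule.
    prod = 1.0
    for i in range(t):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        prod *= 1.0 - beta
    return prod

def add_noise(pixels, t, rng):
    # Forward direction: blend the image with random noise in a single jump.
    ab = alpha_bar(t)
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [math.sqrt(ab) * p + math.sqrt(1.0 - ab) * n
             for p, n in zip(pixels, noise)]
    return noisy, noise

def remove_noise(noisy, predicted_noise, t):
    # Reverse direction: given an estimate of the noise, solve for the pixels.
    ab = alpha_bar(t)
    return [(x - math.sqrt(1.0 - ab) * n) / math.sqrt(ab)
            for x, n in zip(noisy, predicted_noise)]

rng = random.Random(0)
image = [0.1, 0.5, 0.9, 0.3]            # stand-in for real pixel values
noisy, true_noise = add_noise(image, t=500, rng=rng)
restored = remove_noise(noisy, true_noise, t=500)
print("signal left after all 1000 steps:", alpha_bar(1000))
```

By the final step almost none of the original signal is left, which is why generation can begin from what looks like pure static.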

The point of this training isn’t to give the model countless images that it can directly use to create new ones when a user gives it a text prompt. Instead, Farid explained, they serve as a kind of background instruction that allows the model to infer concepts like color, objects and artistic style.

That’s why the models can create novel images that are “semantically consistent” with the prompt, Farid said. In other words, the resulting visual matches the keywords that inspired it.

“All of the images are relevant to [the model]. Everything it’s been trained on, the whole body of it. And that’s what makes it so amazing,” he said. “It’s not cobbling together an image from a bunch of images it’s seen before — that’d be really boring.”

This novel approach makes diffusion-based models more powerful in terms of what they can create, he said. Whereas GANs could be used for more basic purposes like fake profile pictures for a social media account, the possibilities with current models — for better or for worse — are unending.

How might we use AI-generated images?

The power to create convincing images of anything you can imagine comes with obvious consequences. Users are in some cases free to create a wide range of misinformative images that could have real-world influence, or harmful ones that are sexually graphic or violent. Some models have guardrails that limit what users can create with them, but others offer very few limitations or none at all.

Another issue is that the data sets of images that underpin these models are derived from the internet, which reflects our social biases, said Anima Anandkumar, Bren Professor of Computing at California Institute of Technology and senior director of AI research at NVIDIA. She noted that because the models have captured the “underlying distribution of data” that they were trained on, they inherently reflect the essence of that information, which comes with its own “imbalances.”

“If you were to scrape all of web data, indeed there is underrepresentation of so many communities across the world and overrepresentation of certain others,” Anandkumar said. “So we would be trying to mimic that, and that comes with its own biases.”

Take the AI-powered app Lensa, for example, which in November 2022 launched a new feature dubbed “Magic Avatars” that turns real-life photos into multi-genre portraits. The feature quickly exploded in popularity, and countless social media users jumped at the opportunity to post their results. But the app has also been criticized by those who say that it copies real artists, and by users who point out that many of its portraits invoke racist and sexist stereotypes.

Anandkumar believes, though, that generative AI also has the potential for good. While it is able to generate completely new content, its counterpart — the much more common discriminative AI — is tasked with classifying and labeling content in different categories. In other words, it’s long been easier to teach a model how to tell images of a cat and a dog apart than to teach it to create new images of cats and dogs.

Anandkumar posits that generative AI can be used to fill in some of the information gaps that currently limit the capabilities of discriminative AI, allowing it to perform better particularly in cases where our limited data sets reflect social biases.

“For instance, if the data set does not have good representation on darker skin tones, but now we have really impressive generative abilities to augment that data with realistic-looking images, then we can train it to [improve real-world recognition of] a darker skin tone, so that would improve the discriminative capabilities of current models,” she added.

That could, for example, enhance a discriminative AI model’s ability to perform face recognition on a wider range of skin tones. Generative AI could similarly be used to help self-driving cars identify objects on the road, Anandkumar said, potentially improving their safety.
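As a rough sketch of the bookkeeping behind that idea, the snippet below counts how many synthetic images each group would need to match the best-represented group in a data set. The group labels and the policy of topping every group up to the largest one are assumptions made for illustration; generating the images themselves, and checking that they are realistic, is the hard part and is not shown.

```python
from collections import Counter

def synthetic_needed(labels):
    # Count the examples per group, then report how many generated images
    # each group would need to reach the size of the largest group.
    counts = Counter(labels)
    target = max(counts.values())
    return {group: target - n for group, n in counts.items()}

# Hypothetical data set: eight images labeled "lighter", two labeled "darker".
labels = ["lighter"] * 8 + ["darker"] * 2
print(synthetic_needed(labels))
```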

How can we fight misinformative AI-generated images?

Even considering the positive contributions generative AI can offer, the question of what to do about misleading content looms large. Farid pointed to the “liar’s dividend,” or the idea that people, particularly those in positions of power, could dismiss a real image, video or even audio recording as fake without offering any evidence, an approach that’s already been popularized by politicians worldwide.

In the fast-moving landscape of social media, he added, it doesn’t take long for false information to have a real impact.

“You put a fake video of [Jeff] Bezos saying Amazon’s profits are down 20 percent, the market’s going to move in seconds,” Farid said.

One of several potential antidotes to misinformation is being developed by the Coalition for Content Provenance and Authenticity (C2PA). It’s a multi-stakeholder organization that’s designing a new way to authenticate images and videos as they are recorded, explained Farid, who is affiliated with the group. He offered the example of being able to one day open a C2PA-compliant app on your phone that attaches your identity, location and the time of recording to the content itself, plus a digital signature of verification. If that photo or video was eventually circulated, anyone could access that information to make sure it’s legitimate.
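The general idea of binding capture details to a file can be sketched with Python's standard library. This is not the C2PA format: the field names are hypothetical, and a keyed hash (HMAC) stands in for the certificate-based public-key signatures a real provenance system would use, so that the example runs without extra packages.

```python
import hashlib
import hmac
import json

def sign_capture(content: bytes, metadata: dict, key: bytes) -> dict:
    # Tie the metadata to this exact content by including the content's hash.
    record = dict(metadata, content_sha256=hashlib.sha256(content).hexdigest())
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"record": record, "signature": signature}

def verify_capture(content: bytes, manifest: dict, key: bytes) -> bool:
    record = manifest["record"]
    # Reject if the file no longer matches the hash recorded at capture time.
    if record.get("content_sha256") != hashlib.sha256(content).hexdigest():
        return False
    # Reject if the metadata was altered after signing.
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, manifest["signature"])

key = b"demo-device-key"                      # placeholder secret for the demo
photo = b"raw image bytes"                    # stand-in for a real photo file
manifest = sign_capture(
    photo,
    {"creator": "example user", "time": "2023-01-01T12:00:00Z"},  # made-up values
    key,
)
print(verify_capture(photo, manifest, key))            # original file
print(verify_capture(photo + b"edit", manifest, key))  # altered file
```

Because the signature covers both the metadata and a fingerprint of the content, changing either one after the fact causes verification to fail. With public-key signatures, anyone could run that check without holding the signer's secret.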

Anandkumar noted that we’ve adapted to technology before, and although information consumption and misinformation distribution are only moving faster as time goes on, she expressed optimism that most people will continue to adjust to the changes.

“Social media or other platforms, if they don’t implement [verification tools] over time, except for maybe a small fraction of the population, the rest will migrate to those where they can consume content in a meaningful way that they can trust,” Anandkumar said.

We’re currently in the third wave of artificial intelligence technology, said Ming-Ching Chang, an associate professor of computer science at the University at Albany. The first wave was far more simplistic and rule-based, he said. The second and third waves have involved a deepening and broadening of the data that these models use to complete the tasks we design them to carry out.

Chang posits that the near future of AI will focus on improving the fairness and reliability of models for social benefit in our daily lives, offering the example of cancer detection models whose datasets must authentically reflect the population in order to be accurate and useful for everyone. The next few decades of AI development, he said, will focus on making the technology safe and accessible to the average person.

Chang compared artificial intelligence to aviation, noting that much like we looked to birds for inspiration to reach the skies, we’ve looked to our own brains as inspiration to develop AI. After decades of development, air travel is now largely safe, reliable and accessible. We’re currently in the “infant stage” of that process when it comes to artificial intelligence, which he believes will progress in an analogous way.

“I think the upcoming 50 years of AI will be to make each of these aspects — trustworthiness, fairness, privacy, security — good, and then the engineering part, the technology part, will also improve, such that it will become something usable by everybody,” Chang said. “That’s my vision.”