Apple Touts 'Differential Privacy' Data Gathering Technique in iOS 10

With the announcement of iOS 10 at WWDC on Monday, Apple mentioned its adoption of "Differential Privacy" – a mathematical technique that allows the company to collect user information that helps it enhance its apps and services while keeping the data of individual users private.

During the company's keynote address, Senior VP of software engineering Craig Federighi – a vocal advocate of personal privacy – summarized the concept in the following way:

"We believe you should have great features and great privacy. Differential privacy is a research topic in the areas of statistics and data analytics that uses hashing, subsampling and noise injection to enable…crowdsourced learning while keeping the data of individual users completely private. Apple has been doing some super-important work in this area to enable differential privacy to be deployed at scale."

Wired has now published an article on the subject that lays out in clearer detail some of the practical implications and potential pitfalls of Apple's latest statistical data gathering technique.

"Differential privacy, translated from Apple-speak, is the statistical science of trying to learn as much as possible about a group while learning as little as possible about any individual in it. With differential privacy, Apple can collect and store its users' data in a format that lets it glean useful notions about what people do, say, like and want. But it can't extract anything about a single, specific one of those people that might represent a privacy violation. And neither, in theory, could hackers or intelligence agencies."
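To make the "learn about the group, not the individual" idea concrete, here is a minimal sketch of randomized response, a classic textbook noise-injection mechanism. It is not Apple's implementation, whose details the article does not give; the function names, the 50/50 coin probabilities, and the simulated population are all assumptions chosen for illustration.

```python
import random


def randomized_response(truth: bool, rng: random.Random) -> bool:
    """Hypothetical client-side step: answer honestly half the time,
    otherwise report the result of a fair coin flip."""
    if rng.random() < 0.5:
        return truth
    return rng.random() < 0.5


def estimate_true_rate(reports) -> float:
    """Hypothetical server-side step: undo the noise in aggregate.

    Under the scheme above, P(report is True) = 0.5 * p + 0.25,
    where p is the true fraction of users whose answer is True.
    """
    observed = sum(reports) / len(reports)
    return (observed - 0.25) / 0.5


def simulate(n_users: int, true_rate: float, seed: int) -> float:
    """Simulate n_users noisy reports and return the estimated rate."""
    rng = random.Random(seed)
    reports = [
        randomized_response(rng.random() < true_rate, rng)
        for _ in range(n_users)
    ]
    return estimate_true_rate(reports)


if __name__ == "__main__":
    # With many users the estimate lands close to the true rate,
    # even though no single report can be trusted on its own.
    print(simulate(100_000, 0.3, seed=1))
```

Any single report is deniable, since a "yes" may simply be the coin talking, yet the aggregate estimate becomes more accurate as the number of users grows.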

Wired notes that the technique is said to carry a mathematically "provable guarantee" that the data sets it generates cannot be de-anonymized by outside parties. It does, however, caution that such complicated techniques depend on rigorous implementation to retain any guarantee of privacy during transmission.
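For reference, the guarantee in question is usually stated through the standard definition of ε-differential privacy from the research literature. The notation below is introduced here for illustration and does not come from Apple or the Wired article: a randomized mechanism $\mathcal{M}$ is ε-differentially private if, for every pair of data sets $D$ and $D'$ that differ in one individual's data and for every set of possible outputs $S$,

$$
\Pr[\mathcal{M}(D) \in S] \;\le\; e^{\varepsilon} \, \Pr[\mathcal{M}(D') \in S].
$$

A smaller ε means the output distribution changes less when any one person's data is added or removed, which is the sense in which the result reveals little about an individual. The article does not say what value of ε, if any, Apple uses.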

The full article on differential privacy is available from Wired.


Popular Stories

Apple will adopt the same rear chassis manufacturing process for the iPhone SE 4 that it is using for the upcoming standard iPhone 16, claims a new rumor coming out of China. According to the Weibo-based leaker "Fixed Focus Digital," the backplate manufacturing process for the iPhone SE 4 is "exactly the same" as the standard model in Apple's upcoming iPhone 16 lineup, which is expected to...

Apple typically releases its new iPhone series around mid-September, which means we are about two months out from the launch of the iPhone 16. Like the iPhone 15 series, this year's lineup is expected to stick with four models – iPhone 16, iPhone 16 Plus, iPhone 16 Pro, and iPhone 16 Pro Max – although there are plenty of design differences and new features to take into account. To bring ...

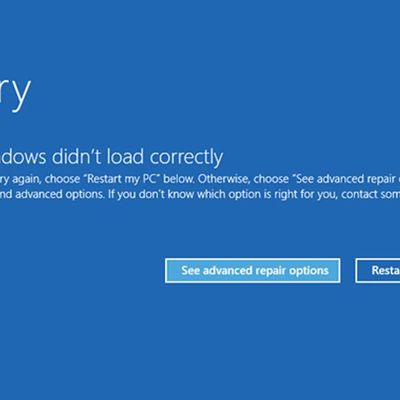

A widespread system failure is currently affecting numerous Windows devices globally, causing critical boot failures across various industries, including banks, rail networks, airlines, retailers, broadcasters, healthcare, and many more sectors. The issue, manifesting as a Blue Screen of Death (BSOD), is preventing computers from starting up properly and forcing them into continuous recovery...

Israel-based mobile forensics company Cellebrite is unable to unlock iPhones running iOS 17.4 or later, according to leaked documents verified by 404 Media. The documents provide a rare glimpse into the capabilities of the company's mobile forensics tools and highlight the ongoing security improvements in Apple's latest devices. The leaked "Cellebrite iOS Support Matrix" obtained by 404 Media...

Apple is seemingly planning a rework of the Apple Watch lineup for 2024, according to a range of reports from over the past year. Here's everything we know so far. Apple is expected to continue to offer three different Apple Watch models in five casing sizes, but the various display sizes will allegedly grow by up to 12% and the casings will get taller. Based on all of the latest rumors,...

If you have an old Apple Watch and you're not sure what to do with it, a new product called TinyPod might be the answer. Priced at $79, the TinyPod is a silicone case with a built-in scroll wheel that houses the Apple Watch chassis. When an Apple Watch is placed inside the TinyPod, the click wheel on the case is able to be used to scroll through the Apple Watch interface. The feature works...