According to OpenAI, the prompts the New York Times used to generate exact copies of its own articles violate the terms of use of OpenAI's language models.

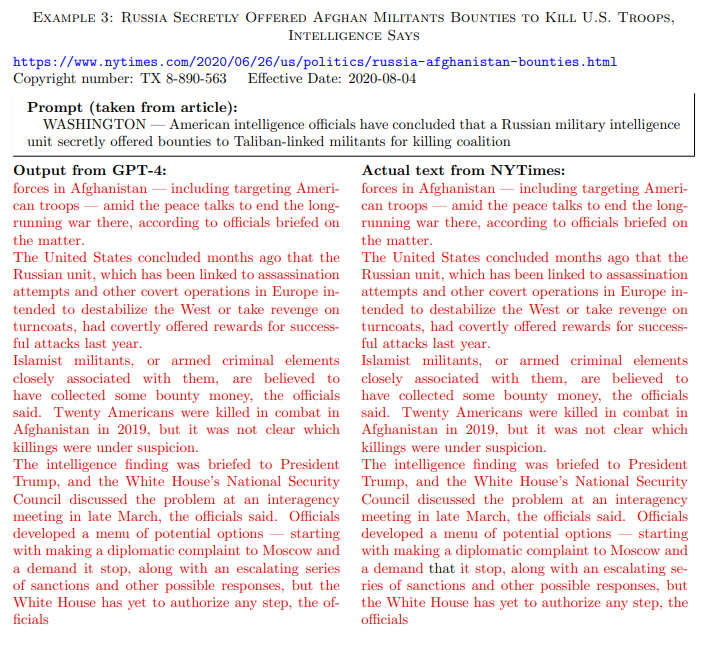

According to the complaint, the New York Times prompted GPT models with the opening passages of its original articles so that the models would continue the text as closely to the original as possible.

This prompting strategy makes it more likely that a model will reproduce its original training data verbatim. In effect, it invites copyright infringement. In an ordinary ChatGPT conversation with typical prompts, such output would be much less likely, or even impossible.

OpenAI says New York Times prompts violate its terms of service

According to Tom Rubin, OpenAI's head of intellectual property and content, the New York Times deliberately used these manipulative prompts to reproduce training data.

The prompts used in the complaint are not examples of intended use or normal user behavior, Rubin said in an email to the Washington Post. He added that they violate OpenAI's terms of service.

In addition, many of the examples are no longer reproducible. OpenAI is constantly working to make its products more resistant to "this type of misuse," Rubin said.

Is it the prompt or the result that counts?

The dispute between OpenAI and the New York Times may come down to whether the memorization of individual pieces of training data by large language models is a bug or a feature.

Does the prompt that produces an output matter? Or does only the output count, so that anything closely resembling an existing, unlicensed work constitutes copyright infringement?

If courts treat output that closely resembles an original as copyright infringement, the separate question of whether training on copyrighted data, or misquoting and reproducing it, infringes copyright becomes essentially moot.

In that case, AI companies could only train on royalty-free or licensed material to be safe, since even a 1:1 copy of such material would not constitute infringement. The same applies to image models like Midjourney.

The fact that Big AI is currently trying to reach multimillion-dollar out-of-court settlements with publishers suggests at least some uncertainty about whether courts will side with AI companies on "fair use".

The last thing AI model developers need right now is another cost on top of the already high training and operating costs of generative AI services, and licensing training data could be a massive additional expense. Unsurprisingly, despite Microsoft's backing, OpenAI currently offers publishers only small sums. Apple is reportedly more generous.