This article is part of our coverage of the latest in AI research.

AI agents are showing impressive capabilities in tackling real-world tasks by combining large language models (LLMs) with tools and multi-step pipelines. LLM agents might one day be able to perform complex tasks autonomously with little or no human oversight.

However, a new paper by researchers at AIWaves points out that the current landscape of language agent research is primarily “model-centric” or “engineering-centric,” requiring substantial manual effort from experts to design prompts, tools, and pipelines for specific tasks.

This limits the ability of language agents to autonomously learn and evolve from data. The researchers propose “agent symbolic learning,” a framework that enables language agents to optimize themselves on their own. According to their experiments, symbolic learning can create “self-evolving” agents that can automatically improve after being deployed in real-world settings.

The limitations of current agentic systems

AI agents extend the functionality of LLMs by enhancing them with external tools and integrating them into systems that perform multi-step workflows.

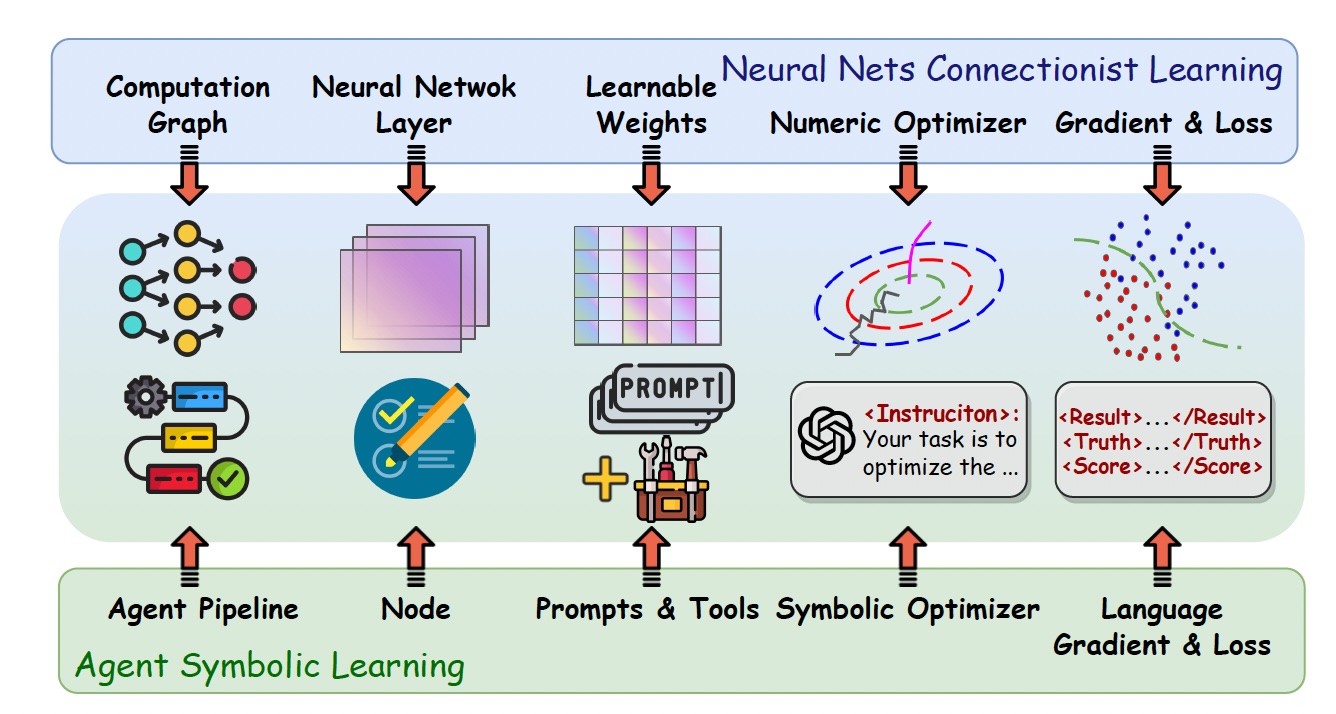

“In a sense, language agents can be viewed as AI systems that connect connectionism AI (i.e., the LLM backbone of agents) and symbolism AI (i.e., the pipeline of prompts and tools), which partially explains their effectiveness in real-world problem-solving scenarios,” the researchers write.

However, developing AI agents for specific tasks involves a complex process of decomposing tasks into subtasks, each of which is assigned to an LLM node. Researchers and developers must design custom prompts and tools (e.g., APIs, databases, code executors) for each node and carefully stack them together to accomplish the overall goal. The researchers describe this approach as “model-centric and engineering-centric” and argue that it makes it almost impossible to tune or optimize agents on datasets in the same way that deep learning systems are trained.

In effect, this means that adapting agents to new tasks and distributions requires a lot of engineering effort.

“We believe the transition from engineering-centric language agents development to data-centric learning is an important step in language agent research,” the researchers write.

Existing optimization methods for AI agents are prompt-based and search-based, and they have three major limitations. First, search-based algorithms only work when there is a well-defined numerical metric that can be formulated into an equation. In real-world agentic tasks such as software development or creative writing, success can’t be measured by a simple equation. Second, current optimization approaches update each component of the agentic system separately and can get stuck in local optima without measuring the progress of the entire pipeline. Finally, these techniques can’t add new nodes to the pipeline or implement new tools.

“This resembles the early practice in training neural nets where layers are separately optimized and it now seems trivial that optimizing neural nets as a whole leads to better performance,” the researchers write. “We believe that this is also the case in agent optimization and joint optimization of all symbolic components within an agent is the key for optimizing agents.”

The agent symbolic learning framework

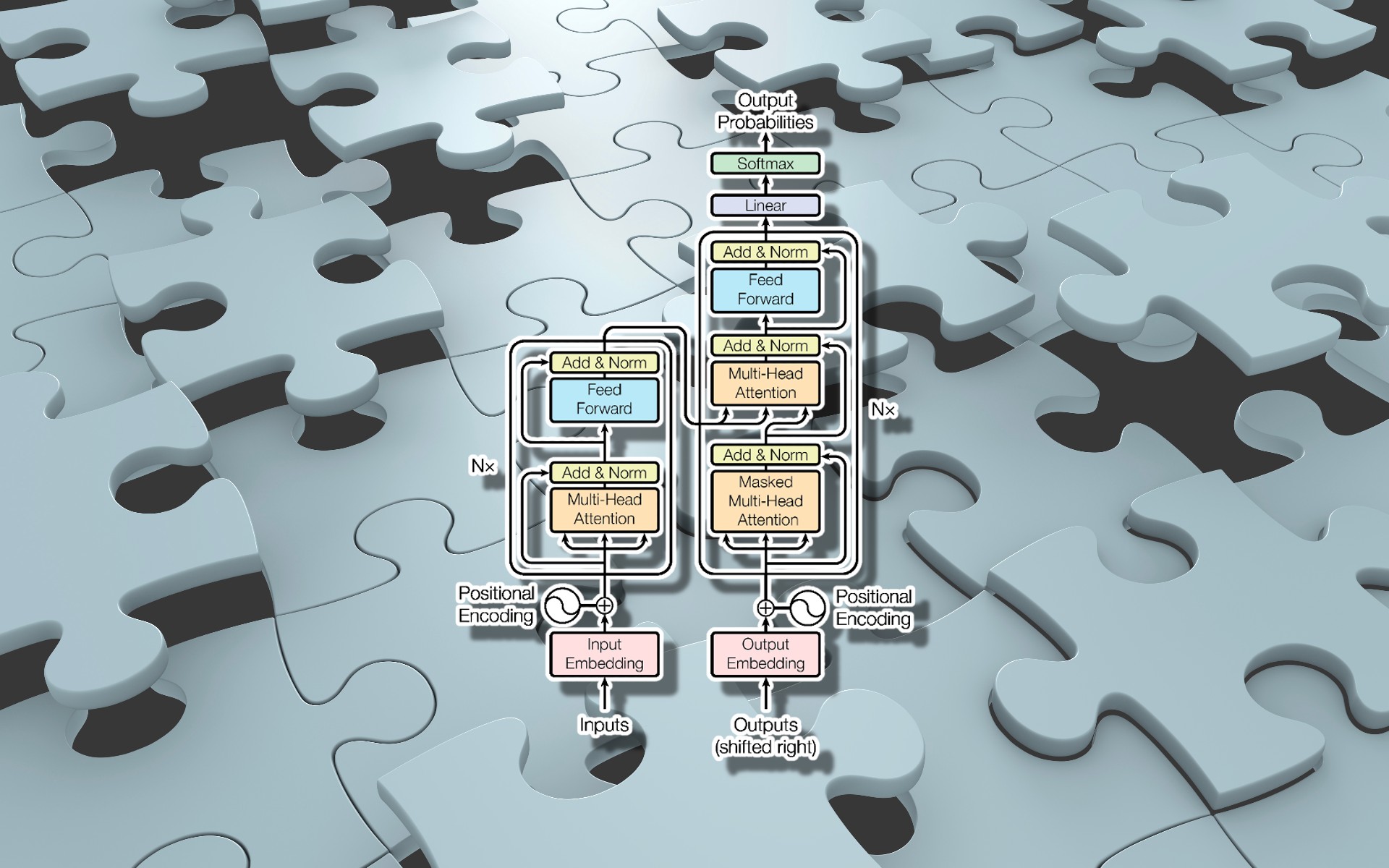

To address these limitations, the researchers propose the “agent symbolic learning” framework, inspired by the learning procedure used for training neural networks.

“We make an analogy between language agents and neural nets: the agent pipeline of an agent corresponds to the computational graph of a neural net, a node in the agent pipeline corresponds to a layer in the neural net, and the prompts and tools for a node correspond to the weights of a layer,” the researchers write.
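The analogy can be made concrete with a small data model. The following is a minimal sketch, not the researchers' code: the `Node` and `AgentPipeline` classes and the `symbolic_weights` method are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical types for illustration; not the paper's actual API.
Tool = Callable[[str], str]


@dataclass
class Node:
    """One step in the pipeline; plays the role of a layer."""
    name: str
    prompt: str  # a symbolic "weight"
    tools: Dict[str, Tool] = field(default_factory=dict)  # also "weights"


@dataclass
class AgentPipeline:
    """Ordered nodes; plays the role of the computational graph."""
    nodes: List[Node]

    def symbolic_weights(self) -> Dict[str, dict]:
        """Collect everything a learner would be allowed to update."""
        return {
            n.name: {"prompt": n.prompt, "tools": sorted(n.tools)}
            for n in self.nodes
        }


pipeline = AgentPipeline(nodes=[
    Node("plan", "Break the task into steps."),
    Node("write", "Write a draft that follows the plan.",
         tools={"search": lambda q: f"results for {q}"}),
])
print(pipeline.symbolic_weights())
```

In this framing, "training" the agent means editing the prompt strings, the tool set, and the list of nodes itself, rather than adjusting numerical parameters.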

The agent symbolic learning framework implements the main components of connectionist learning (backward propagation and gradient-based weight update) in the context of agent training using language-based loss, gradients, and weights.

The framework starts with a “forward pass” in which the agentic pipeline is executed for an input command. The forward pass is almost identical to standard agent execution. The main difference is that the learning framework records the input, prompts, tool usage, and output of each node in a trajectory, which is used in the next stages to calculate the gradients and perform back-propagation.
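A forward pass that records a trajectory might look like the sketch below. It assumes a simple sequential pipeline and a hypothetical `llm` callable that maps a prompt string to a completion; `Step`, `forward`, and `fake_llm` are illustrative names, and tool calls are left out to keep the example short.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

LLM = Callable[[str], str]  # hypothetical: prompt text in, completion out


@dataclass
class Node:
    name: str
    prompt: str


@dataclass
class Step:
    """One trajectory entry: what a node saw and what it produced."""
    node: str
    prompt: str
    input: str
    output: str


def forward(nodes: List[Node], task_input: str, llm: LLM) -> Tuple[str, List[Step]]:
    """Run nodes in order, recording each step in a trajectory."""
    trajectory: List[Step] = []
    current = task_input
    for node in nodes:
        output = llm(f"{node.prompt}\n\nInput:\n{current}")
        trajectory.append(Step(node.name, node.prompt, current, output))
        current = output  # each node's output feeds the next node
    return current, trajectory


def fake_llm(prompt: str) -> str:
    """Stand-in model so the sketch runs offline."""
    return f"<{len(prompt)} chars processed>"


nodes = [Node("plan", "Outline the answer."), Node("write", "Write the answer.")]
result, trajectory = forward(nodes, "Explain recursion.", fake_llm)
```

The only difference from ordinary execution is the `trajectory.append(...)` line, which is what later stages would read.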

The framework then uses a “prompt-based loss function” to evaluate the outcome and calculate a “language loss.” The researchers designed a prompt template for language loss computation that includes a task description, input, trajectory, few-shot demonstrations, principles, and output format control.

The task description, input, and trajectory are data-dependent, which means they will be automatically adjusted as the pipeline gathers more data. The few-shot demonstrations, principles, and output format control are fixed for all tasks and training examples. The language loss consists of both natural language comments and a numerical score, also generated via prompting.
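As a rough illustration, a prompt-based loss could be assembled as follows. This is a sketch under stated assumptions: the placeholder texts, the JSON reply format, the 0 to 10 score range, and the names `build_loss_prompt` and `language_loss` are invented for the example and are not taken from the paper.

```python
import json
from typing import Callable, Dict

LLM = Callable[[str], str]  # hypothetical: prompt text in, completion out

# Fixed parts, shared across tasks and training examples (placeholder text).
FEW_SHOT = "Example: output 'Paris' for 'Capital of France?' -> score 10."
PRINCIPLES = "Judge correctness first, then clarity."
OUTPUT_FORMAT = 'Reply with JSON: {"comments": "<text>", "score": <0-10>}'


def build_loss_prompt(task_description: str, task_input: str, trajectory: str) -> str:
    """Combine the data-dependent and fixed parts into one evaluation prompt."""
    return "\n\n".join([
        f"Task: {task_description}",
        f"Input: {task_input}",
        f"Trajectory:\n{trajectory}",
        f"Demonstrations:\n{FEW_SHOT}",
        f"Principles:\n{PRINCIPLES}",
        OUTPUT_FORMAT,
    ])


def language_loss(prompt: str, llm: LLM) -> Dict[str, object]:
    """Ask the model for a critique; return comments plus a numeric score."""
    reply = json.loads(llm(prompt))
    return {"comments": str(reply["comments"]), "score": float(reply["score"])}


def fake_llm(prompt: str) -> str:
    """Stand-in evaluator so the sketch runs offline."""
    return json.dumps({"comments": "Answer omits the base case.", "score": 4})


prompt = build_loss_prompt(
    "Answer programming questions.",
    "Explain recursion.",
    "plan -> write -> 'Recursion is ...'",
)
loss = language_loss(prompt, fake_llm)
```

The textual comments carry most of the signal for later stages; the numeric score is mainly useful for tracking progress.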

Next, the system back-propagates the language loss from the last to the first node along the trajectory, resulting in textual analyses and reflections for the symbolic components within each node. These reflections are called language gradients.

In standard deep learning, back-propagation calculates gradients to measure the impact of the weights on the overall loss so that the optimizers can update the weights accordingly. In the agent symbolic learning framework, language gradients play a similar role.
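The backward pass can be sketched as a loop over the trajectory in reverse order. This is an illustrative approximation only: the reflection prompt wording and the names `Step`, `backward`, and `fake_llm` are assumptions, and the real framework's prompts are more elaborate.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

LLM = Callable[[str], str]  # hypothetical: prompt text in, completion out


@dataclass
class Step:
    node: str
    prompt: str
    input: str
    output: str


def backward(trajectory: List[Step], language_loss: str, llm: LLM) -> Dict[str, str]:
    """Walk the trajectory last-to-first, producing one text gradient per node."""
    gradients: Dict[str, str] = {}
    downstream = language_loss  # feedback arriving from later stages
    for step in reversed(trajectory):
        gradient = llm(
            f"Node: {step.node}\nPrompt: {step.prompt}\n"
            f"Input: {step.input}\nOutput: {step.output}\n"
            f"Feedback from later stages: {downstream}\n"
            "Explain how this node contributed to the feedback and how to improve it."
        )
        gradients[step.node] = gradient
        downstream = gradient  # earlier nodes see the reflection on later ones
    return gradients


seen: List[str] = []


def fake_llm(prompt: str) -> str:
    """Stand-in model that logs prompts and returns a canned reflection."""
    seen.append(prompt)
    node = prompt.splitlines()[0].split(": ", 1)[1]
    return f"reflection for {node}"


trajectory = [
    Step("plan", "Outline the answer.", "Explain recursion.", "1. define 2. example"),
    Step("write", "Write the answer.", "1. define 2. example", "Recursion is ..."),
]
gradients = backward(trajectory, "Answer omits the base case.", fake_llm)
```

Passing each node's reflection to the node before it is the textual counterpart of the chain rule: earlier nodes are critiqued in light of what went wrong downstream.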

The framework uses “symbolic optimizers” to update all symbolic components in each node and their connections based on the language gradients. Symbolic optimizers are carefully designed prompt pipelines that can optimize the symbolic weights of an agent. The researchers created separate optimizers for prompts, tools, and pipelines.
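A prompt optimizer, the simplest of the three, could be sketched as below. The names `optimize_prompts` and `fake_llm` and the rewrite instruction are hypothetical; tool and pipeline optimizers would follow the same pattern but are omitted here.

```python
from dataclasses import dataclass, replace
from typing import Callable, Dict, List

LLM = Callable[[str], str]  # hypothetical: prompt text in, completion out


@dataclass(frozen=True)
class Node:
    name: str
    prompt: str


def optimize_prompts(nodes: List[Node], gradients: Dict[str, str], llm: LLM) -> List[Node]:
    """Rewrite each node's prompt using its language gradient, if it has one."""
    updated: List[Node] = []
    for node in nodes:
        gradient = gradients.get(node.name)
        if gradient is None:
            updated.append(node)  # no feedback for this node, keep it as-is
            continue
        new_prompt = llm(
            f"Current prompt:\n{node.prompt}\n\nCritique:\n{gradient}\n\n"
            "Return an improved prompt only."
        ).strip()
        # Fall back to the old prompt if the model returns nothing usable.
        updated.append(replace(node, prompt=new_prompt or node.prompt))
    return updated


def fake_llm(prompt: str) -> str:
    """Stand-in optimizer model so the sketch runs offline."""
    return "Write the answer and include a worked example.\n"


nodes = [Node("plan", "Outline the answer."), Node("write", "Write the answer.")]
gradients = {"write": "The draft lacked a concrete example."}
updated = optimize_prompts(nodes, gradients, fake_llm)
```

Returning new `Node` objects instead of mutating the old ones keeps the previous version of the agent around, which makes it easy to roll back if an update hurts performance.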

This top-down scheme enables the agent symbolic learning framework to optimize the agent system “holistically” and avoid getting stuck in local optima for separate components.

“The agent symbolic learning framework is an agent learning framework that mimics the standard connectionist learning procedure,” the researchers write. “In contrast to existing methods that either optimize single prompt or tool in a separate manner, the agent symbolic learning framework jointly optimizes all symbolic components within an agent system.”

Agent symbolic learning in action

The researchers ran experiments on standard benchmarks for LLMs, including HotpotQA, a challenging question-answering dataset; MATH, a collection of challenging competition mathematics problems; and HumanEval, a programming evaluation set that requires LLMs or agents to synthesize programs from docstrings.

They compared their method against popular baselines, including prompt-engineered GPTs, plain agent frameworks, the DSPy LLM pipeline optimization framework, and an agentic framework that automatically optimizes its prompts.

According to their findings, the agent symbolic learning framework consistently outperformed other methods.

“Our results demonstrate the effectiveness of the proposed agent symbolic learning framework to optimize and design prompts and tools, as well as update the overall agent pipeline by learning from training data,” the researchers write.

The researchers also tested the framework on complex agentic tasks such as creative writing and software development. Here, their approach outperformed all compared baselines on both tasks, and by a wider margin than on the conventional LLM benchmarks.

“Interestingly, our approach even outperforms tree-of-thought, a carefully designed prompt engineering and inference algorithm, on the creative writing task,” the researchers write. “We find that our approach successfully finds the plan, write, and revision pipeline and the prompts are very well optimized in each step.”

To facilitate future research on data-centric agent learning, the researchers have open-sourced the code and prompts used in the agent symbolic learning framework.

“We believe this transition from model-centric to data-centric agent research is a meaningful step towards approaching artificial general intelligence,” the researchers write.