Retrieval augmented generation (RAG) has become a crucial tool for working with large language models (LLMs). RAG lets an LLM incorporate external documents into its responses, so its answers align more closely with what users need. This is especially useful in areas where LLMs traditionally falter, particularly when factual accuracy matters.

Since the advent of ChatGPT and similar LLMs, a plethora of RAG tools and libraries have emerged. Here is what you need to know about how RAG works and how you can get started using it with ChatGPT, Claude, or an LLM of your choice.

The benefits of RAG

When you interact with a large language model, it draws on the knowledge embedded in its training data to formulate a response. However, the training data contains far more information than the model’s parameters can store, so the model cannot retain every detail and its responses may not be entirely accurate. Moreover, the diverse information used in training can cause the LLM to conflate details, resulting in plausible yet incorrect answers, a phenomenon known as “hallucinations.”

In some instances, you might want the LLM to use information not encompassed in its training data, such as a recent news article, a scholarly paper, or proprietary company documents. This is where retrieval augmented generation comes into play.

RAG addresses these issues by equipping the LLM with pertinent information before it generates a response. This involves retrieving (hence the name) documents from an external source and inserting their contents into the conversation to provide context to the LLM.

This process enhances the model’s accuracy and enables it to formulate responses based on the provided content. Experiments show that RAG significantly curtails hallucinations. It also proves beneficial in applications requiring up-to-date or customer-specific information not included in the training dataset.

To put it simply, the difference between a standard LLM and a RAG-enabled LLM is like the difference between two people answering questions. The former responds from memory, while the latter is given documents to read and answers based on their content.

How RAG works

RAG operates on a straightforward principle. It identifies one or more documents pertinent to your query, incorporates them into your prompt, and modifies the prompt to include instructions for the model to base its responses on these documents.
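Here is a minimal Python sketch of the prompt-assembly step. The function name `build_rag_prompt` and the exact wording of the instructions are illustrative assumptions, not a standard template from any particular library.

```python
def build_rag_prompt(question: str, documents: list[str]) -> str:
    """Combine retrieved documents and the user's question into one prompt."""
    # Number each document so the model can tell them apart.
    context = "\n\n".join(
        f"[Document {i}]\n{doc.strip()}" for i, doc in enumerate(documents, start=1)
    )
    # Instructions asking the model to ground its answer in the documents.
    instructions = (
        "Answer the question using only the documents below. "
        "If the documents do not contain the answer, say that you don't know."
    )
    return f"{instructions}\n\n{context}\n\nQuestion: {question}\nAnswer:"


prompt = build_rag_prompt(
    "When was the product launched?",
    ["The product launched in March 2023.", "It is sold in 12 countries."],
)
print(prompt)
```

Putting the instructions first and the question last is a common layout, but not the only one; some prompts place the question before the documents as well.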

You can manually implement RAG by copy-pasting a document’s content into your prompt and instructing the model to formulate responses based on this document.

A RAG pipeline automates this process. It begins by comparing the user’s prompt with a database of documents and retrieving those most relevant to the topic. The pipeline then inserts their content into the prompt and adds instructions to ensure the LLM sticks to the documents’ content.
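The sketch below shows the shape of such a pipeline in plain Python: retrieve, augment, generate. It makes two loud simplifications. Relevance is scored by counting shared words, a crude stand-in for embedding similarity, and `llm` is any hypothetical callable that takes a prompt string and returns text; in practice it would wrap a call to ChatGPT, Claude, or another model.

```python
import re


def tokenize(text: str) -> set[str]:
    # Lowercased word set; a crude stand-in for a real embedding model.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k documents that share the most words with the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:top_k]


def rag_pipeline(query: str, documents: list[str], llm, top_k: int = 2) -> str:
    """Retrieve relevant documents, insert them into the prompt, call the model."""
    context = retrieve(query, documents, top_k)
    prompt = (
        "Answer using only the documents below.\n\n"
        + "\n\n".join(context)
        + f"\n\nQuestion: {query}"
    )
    return llm(prompt)


docs = [
    "Paris is the capital of France.",
    "Bananas are yellow.",
    "The Eiffel Tower is in Paris.",
]
# A fake "LLM" that returns its prompt unchanged, so the example runs offline.
print(rag_pipeline("What is the capital of France?", docs, llm=lambda p: p, top_k=1))
```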

What do you need for a RAG pipeline?

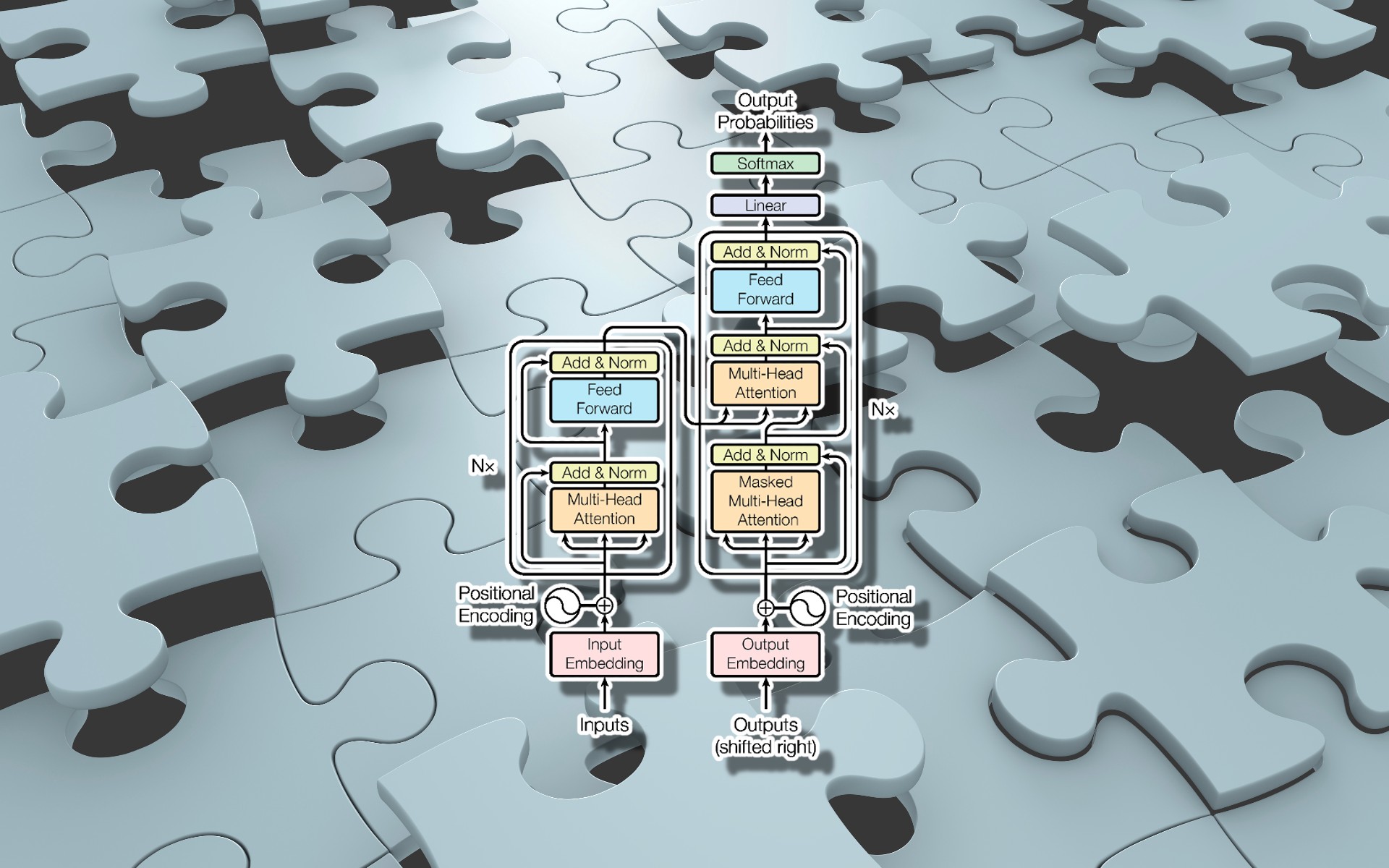

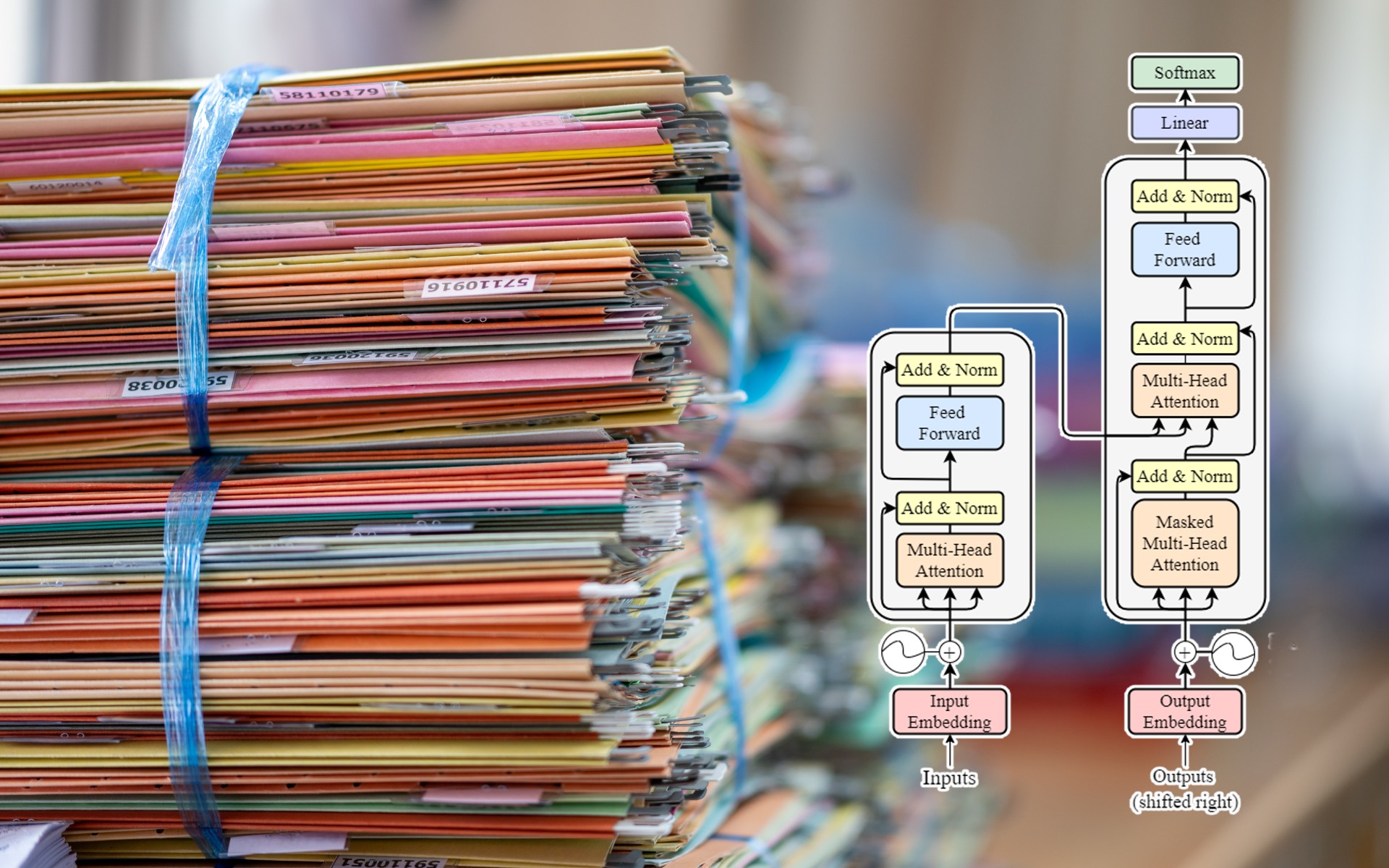

While retrieval augmented generation is an intuitive concept, its execution requires the seamless integration of several components.

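First, you need the primary language model that generates responses. Alongside it, you need an embedding model that encodes both documents and user prompts into lists of numbers, or “embeddings,” which represent their semantic content. Texts with similar meanings end up with similar embeddings, which is what makes it possible to find the documents relevant to a prompt.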
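To show what “a list of numbers” and “similar” mean in practice, here is a toy stand-in for an embedding model. It is not a real one: `embed` hashes each word into one of a fixed number of buckets (the so-called hashing trick), so it only captures shared words, not meaning. A real pipeline would call an embedding model instead, but the output has the same form, and cosine similarity is a common way to compare such vectors.

```python
import math
import re
import zlib

DIM = 64  # length of each embedding vector (illustrative choice)


def embed(text: str, dim: int = DIM) -> list[float]:
    """Toy embedding: hash each word into one of `dim` buckets, then L2-normalize.

    A real embedding model captures meaning (synonyms, paraphrases); this only
    captures shared words, but it returns the same kind of fixed-length vector.
    """
    vec = [0.0] * dim
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        # crc32 is deterministic across runs, unlike Python's built-in hash().
        vec[zlib.crc32(word.encode()) % dim] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


def cosine(a: list[float], b: list[float]) -> float:
    # Both vectors are already normalized, so the dot product is the cosine similarity.
    return sum(x * y for x, y in zip(a, b))


print(cosine(embed("the cat sat on the mat"), embed("a cat sat on a mat")))
```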

Next, a vector database is required to store these document embeddings and retrieve the most relevant ones each time a user query is received. In some cases, a ranking model is also beneficial to further refine the order of the documents provided by the vector database.
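A minimal in-memory sketch of the two roles described above follows. `InMemoryVectorStore` and `rerank` are hypothetical names; a production system would use an actual vector database with an approximate nearest-neighbor index and a dedicated ranking model, not the brute-force scan and word-overlap scorer used here. The keyword-count `keyword_embed` function is likewise only for illustration.

```python
import heapq
import math
from typing import Callable

Vector = list[float]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class InMemoryVectorStore:
    """Stand-in for a vector database: stores (embedding, text) pairs."""

    def __init__(self, embed: Callable[[str], Vector]):
        self.embed = embed
        self.items: list[tuple[Vector, str]] = []

    def add(self, text: str) -> None:
        self.items.append((self.embed(text), text))

    def search(self, query: str, k: int = 3) -> list[str]:
        q = self.embed(query)
        # Brute-force scan over every stored vector.
        best = heapq.nlargest(k, self.items, key=lambda item: cosine(q, item[0]))
        return [text for _, text in best]


def rerank(query: str, candidates: list[str], score: Callable[[str, str], float]) -> list[str]:
    """Re-order the vector store's candidates with a separate scoring model."""
    return sorted(candidates, key=lambda doc: score(query, doc), reverse=True)


VOCAB = ["refund", "shipping", "password", "login"]


def keyword_embed(text: str) -> Vector:
    # Illustrative only: counts a few keywords instead of using an embedding model.
    lowered = text.lower()
    return [float(lowered.count(word)) for word in VOCAB]


store = InMemoryVectorStore(keyword_embed)
for doc in ["How to request a refund", "Shipping times and costs", "Reset your login password"]:
    store.add(doc)
print(store.search("I forgot my password", k=1))  # ['Reset your login password']


def overlap(q: str, d: str) -> float:
    # Toy reranking score: number of shared lowercase words.
    return len(set(q.lower().split()) & set(d.lower().split()))


print(rerank("refund policy", ["Shipping times", "Refund policy details"], overlap))
```

Splitting retrieval into a cheap first pass and a more careful second pass is the point of the design: the vector search narrows thousands of documents to a handful, and the ranking model only has to order that handful.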

For certain applications, you might want an additional mechanism that splits the user prompt into several parts. Each part then gets its own embedding and its own retrieved documents, which can improve the precision and relevance of the generated responses.
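A rough sketch of that idea: split the prompt, retrieve for each part separately, and merge the results. The splitting rule here (question marks, semicolons, and the phrase “and also”) is a naive heuristic chosen for illustration; many pipelines ask an LLM to rewrite a complex prompt into standalone sub-questions instead. `fake_search` is a hypothetical placeholder for a vector-database lookup.

```python
import re
from typing import Callable


def split_query(prompt: str) -> list[str]:
    """Naive decomposition: split on '?', ';' and the phrase 'and also'."""
    parts = re.split(r"\?|;|\band also\b", prompt)
    return [part.strip() for part in parts if part.strip()]


def retrieve_per_part(
    prompt: str, search: Callable[[str, int], list[str]], k: int = 2
) -> list[str]:
    """Retrieve k documents for each segment and merge them, dropping duplicates."""
    results = [doc for part in split_query(prompt) for doc in search(part, k)]
    return list(dict.fromkeys(results))  # de-duplicates, keeping first-seen order


def fake_search(query: str, k: int) -> list[str]:
    # Placeholder for a vector-database lookup: returns canned documents by keyword.
    library = {"refund": "Refund policy: 30 days.", "shipping": "Shipping takes 3-5 days."}
    return [doc for key, doc in library.items() if key in query.lower()][:k]


question = "What is your refund policy? How long does shipping take?"
print(split_query(question))
# ['What is your refund policy', 'How long does shipping take']
print(retrieve_per_part(question, fake_search))
# ['Refund policy: 30 days.', 'Shipping takes 3-5 days.']
```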

How to get started with RAG with no code

LlamaIndex recently released an open-source tool that allows you to develop a basic RAG application with almost no coding. While currently limited to single-file use, future enhancements may include support for multiple files and vector databases.

The project, named RAGs, is built on the Streamlit web application framework and LlamaIndex, a Python library designed for building RAG applications. If you’re comfortable with GitHub and Python, installation is straightforward: clone the repository, run the install command, and add your OpenAI API key to the configuration file as described in the README.

RAGs is currently configured to work with OpenAI models. However, you can modify the code to use other models such as Anthropic Claude, Cohere models, or open-source models like Llama 2 hosted on your servers. LlamaIndex supports all these models.
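One way to keep a RAG application from being tied to a single provider is to have the pipeline depend on a small interface rather than on a vendor SDK. The sketch below is a generic Python pattern, not how RAGs or LlamaIndex is actually structured; `ChatModel`, `EchoModel`, and `answer` are hypothetical names, and `EchoModel` is an offline placeholder where a real backend would call OpenAI, Anthropic Claude, Cohere, or a self-hosted Llama 2 server.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Anything with a complete(prompt) -> str method can act as the generator."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Offline placeholder backend; swap in a class that calls a real model API."""

    def complete(self, prompt: str) -> str:
        # Return the last line of the prompt so the pipeline can be tested offline.
        return "echo: " + prompt.splitlines()[-1]


def answer(question: str, context: list[str], model: ChatModel) -> str:
    """The RAG logic depends only on the ChatModel interface, not on a vendor."""
    prompt = "Use only these documents:\n" + "\n".join(context) + f"\nQuestion: {question}"
    return model.complete(prompt)


print(answer("Who wrote the report?", ["The report was written by the data team."], EchoModel()))
```

Switching providers then means writing one new class with a `complete` method; the retrieval and prompt-building code stays unchanged.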

The initial run of the application requires you to set up your RAG agent. This involves determining the settings, including the files, the size of chunks you want to break your file into, and the number of chunks to retrieve for each prompt.
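The three settings mentioned above map naturally onto a small settings object. The sketch below is hypothetical: `RAGSettings`, its field names, its defaults, and the JSON layout are illustrative choices and not the configuration format RAGs itself generates.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class RAGSettings:
    """Hypothetical settings mirroring the choices described above."""

    files: list[str] = field(default_factory=list)
    chunk_size: int = 500  # tokens per chunk (illustrative default)
    top_k: int = 2         # chunks retrieved per prompt (illustrative default)

    def __post_init__(self) -> None:
        # Reject settings that would make retrieval meaningless.
        if self.chunk_size <= 0:
            raise ValueError("chunk_size must be positive")
        if self.top_k <= 0:
            raise ValueError("top_k must be positive")

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)

    @classmethod
    def from_json(cls, text: str) -> "RAGSettings":
        return cls(**json.loads(text))


settings = RAGSettings(files=["paper.pdf"], chunk_size=256, top_k=3)
print(settings.to_json())
```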

Chunking plays a crucial role in RAG. When processing a large file, such as a book or a multi-page research paper, you need to break it into manageable chunks of, say, 500 tokens each. This allows the RAG agent to locate the specific part of the document that is relevant to your prompt.
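A simple chunker might look like the sketch below. It assumes tokens can be approximated by whitespace-separated words; a real pipeline would usually count tokens with the model’s own tokenizer. The `overlap` parameter is an addition not mentioned above: many chunkers let consecutive chunks share some text so that content near a boundary appears in both neighbors, and setting it to 0 gives plain non-overlapping chunks.

```python
def chunk_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into chunks of about `chunk_size` words.

    Words approximate tokens here. Consecutive chunks share `overlap` words.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once a chunk reaches the end, to avoid a trailing chunk
        # that would only repeat words already covered.
        if start + chunk_size >= len(words):
            break
    return chunks


book = " ".join(f"w{i}" for i in range(1200))  # a fake 1,200-word document
chunks = chunk_text(book, chunk_size=500, overlap=50)
print(len(chunks), [c.split()[0] for c in chunks])
```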

After you complete these steps, the application creates a configuration file for your RAG agent and uses it to run the code. RAGs is a useful starting point for experimenting with retrieval augmentation and building on top of it. You can find the full guide here.

Alternatively, you can upload your documents as text files to the OpenAI platform and use its built-in retrieval pipeline.

What is the value of RAG, now that we have custom GPTs?

RAG is still valuable when you host your own open-source LLM.